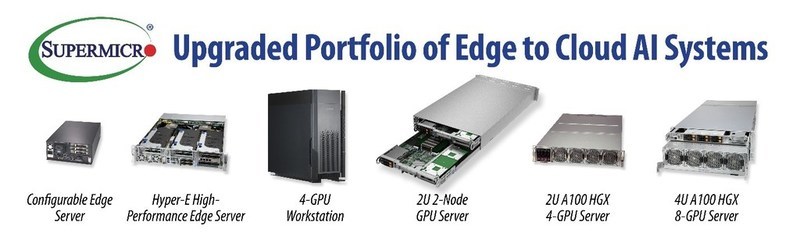

Supermicro Enhances Broadest Portfolio of Edge to Cloud AI Systems with Accelerated Inferencing and New Intelligent Fabric Support

Super Micro Computer, Inc. announces enhancements to the broadest portfolio of Artificial Intelligence (AI) GPU servers, which integrate new NVIDIA Ampere-family GPUs, including the NVIDIA A100, A30, and A2.

Supermicro’s latest NVIDIA-Certified Systems deliver ten times more AI inference performance than previous generations, ensuring that AI-enabled applications such as Image Classification, Object Detection, Reinforcement Learning, Recommendation, Natural Language Processing (NLP), and Automatic Speech Recognition (ASR) can produce faster insights at dramatically lower cost. In addition to inferencing, Supermicro’s powerful selection of A100 HGX 8-GPU and 4-GPU servers delivers three times higher AI training performance and eight times faster performance on big data analytics compared to previous-generation systems.

“Supermicro leads the GPU market with the broadest portfolio of systems optimized for any workload, from the edge to the cloud,” said Charles Liang, president and CEO of Supermicro. “Our total solution for cloud gaming delivers up to 12 single-width GPUs in one 2U 2-node system for superior density and efficiency. In addition, Supermicro also just introduced the new Universal GPU Platform to integrate all major CPU, GPU, and fabric and cooling solutions.”

The Supermicro E-403 server is ideal for distributed AI inferencing applications, such as traffic control and office building environmental conditions. Supermicro Hyper-E edge servers bring unprecedented inferencing to the edge with up to three A100 GPUs per system. Supermicro can now deliver complete IT solutions that accelerate collaboration among engineering and design professionals, including NVIDIA-Certified servers, storage, networking switches, and NVIDIA Omniverse Enterprise software for professional visualization and collaboration.

“Supermicro’s wide range of NVIDIA-Certified Systems are powered by the complete portfolio of NVIDIA Ampere architecture-based GPUs,” said Ian Buck, vice president and general manager of Accelerated Computing at NVIDIA. “This provides Supermicro customers top-of-the-line performance for every type of modern-day AI workflow, from inference at the edge to high-performance computing in the cloud and everything in between.”

Supermicro’s powerful data center 2U and 4U GPU (Redstone, Delta) systems will be the first to market supporting the new Quantum-2 InfiniBand product line and the BlueField DPUs. The NVIDIA Quantum-2 InfiniBand solution includes high-bandwidth, ultra-low latency adapters, switches and cables, and comprehensive software for delivering the highest data center performance, which runs across the broad Supermicro product line.

The Quantum-2 InfiniBand-based systems will provide 400 Gb/s, 2X higher bandwidth per port, increased switch density, and 32X higher AI acceleration per switch than the previous generation of InfiniBand communication adapters and switches. The systems will support both Intel and AMD processors.

With hybrid work environments becoming the norm, new technologies are required to ensure a workforce’s technical parity. The combination of NVIDIA’s Omniverse Enterprise and Supermicro GPU servers will transform complex 3D workflows, resulting in infinite iterations and faster time-to-market for a wide range of innovative products. In addition, NVIDIA’s Omniverse Enterprise and NVIDIA AI Enterprise on VMware, which helps organizations integrate AI into their enterprise workflows, are optimized and tested on Supermicro’s NVIDIA-Certified Systems, enabling geographically diverse teams to work together seamlessly.