How Artificial Intelligence Is Impacting Embedded Military Computing

By Dan Mor, Director, Video & GPGPU Product Line

Artificial intelligence (AI) has come a long way from merely carrying out automated tasks based on a data set. It is truly empowering embedded systems globally, providing ‘rational’ computer-based knowledge using data input. The intuitive processing capabilities of AI have served as the catalyst to propel modern systems into this new realm of high-intensity computing using real-time data.

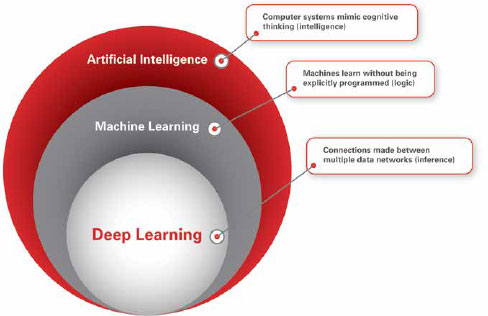

AI itself has matured and expanded into a new discipline called machine learning, where systems can learn from data inputs without being explicitly programmed. Taking it one step further brings us to where we are today: deep learning, which is classified as a subset of machine learning. (Figure 1)

AI Evolves into Deeper Learning

Modeled after the brain’s neural networks, deep learning makes connections between multiple data networks and optimizes those connections to enable a system to make realistic assumptions according to the data. Inferences across data paths can be generated, leading systems to take actions that were not prescribed in advance but were instead acquired through training and the application of knowledge. System intelligence increases, with more accurate, logic-based decisions made more quickly over time. Leading the charge in handling this increased data input, processing and clarity is GPGPU (general-purpose graphics processing unit) technology, which is instrumental in managing the increased computational demands generated by this new paradigm of embedded processing. Nowhere is this more applicable than in mission-critical military operations.

Critical Applications Benefit from AI-based Systems

Mission-critical systems that protect human life and require extreme precision and accuracy truly benefit from implementing an AI-based strategy, since up-to-date operational intelligence is paramount to the success and safety of modern defense initiatives. Technological improvements aimed at greater precision in weapon systems and military operations are highly valued because they promote safety as well as more humane outcomes. When human lives will be directly impacted, completing a mission with fewer weapons expended and with less collateral damage is optimal. In addition, the use of AI-enabled, GPGPU-based HPEC systems to remotely pilot vehicles can lessen the risk to military personnel by placing greater distance between them and danger. (Figure 2)

Non-lethal defense-related activities can benefit from AI as well, including logistics, base operations, lifesaving battlefield medical assistance and casualty evacuation, navigation, communication, cyber-defense and intelligence analysis, to ensure military forces are safer and more effective. AI’s role, and that of GPGPU technology, in these critical systems is to help protect people as well as prepare for and deter attacks.

The applications that benefit from GPU-accelerated computing technology are numerous. In fact, any application that relies on intensive mathematical calculation is likely to see benefits from this technology, including:

- Image Processing:

  - Enemy Detection

  - Vehicle Detection

  - Missile Guidance

  - Obstacle Detection, etc.

- Radar

- Sonar

- Video Encoding and Decoding (NTSC/PAL to H.264)

- Data Encryption/Decryption

- Database Queries

- Motion Detection

- Video Stabilization

Advanced Intelligence Through GPGPU Processing

Real-time response applications require “AI at the Edge,” where processing happens at the sensors. This sharply increases computing requirements, especially for autonomous operations. Using a GPU (graphics processing unit), with its parallel architecture, instead of a CPU, which executes largely serially, can reduce development time and “squeezes” maximum performance per watt from the computation engine.
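To make the serial-versus-parallel distinction concrete, here is a minimal Python sketch of a per-pixel frame-differencing step, the kind of operation that underlies motion detection. The function names, the threshold and the tiny sample frames are hypothetical and chosen only for illustration. The point is that each output value depends only on its own pair of input pixels, so a GPU could in principle assign one thread per pixel, whereas the loop below visits them one at a time.

```python
def pixel_kernel(prev_pixel, curr_pixel, threshold):
    """Work for ONE pixel: flag it if brightness changed by more than threshold."""
    return 1 if abs(curr_pixel - prev_pixel) > threshold else 0


def detect_motion_serial(prev_frame, curr_frame, threshold=10):
    """Serial reference implementation: pixels are visited one after another.

    No iteration reads another iteration's result, so on a parallel
    processor every pixel_kernel call could run at the same time.
    """
    return [
        pixel_kernel(p, c, threshold)
        for p, c in zip(prev_frame, curr_frame)
    ]


# Two hypothetical 1x6 grayscale "frames" (values 0-255), for illustration only.
previous = [12, 40, 200, 90, 90, 15]
current = [14, 80, 198, 30, 90, 15]

mask = detect_motion_serial(previous, current)
print(mask)  # [0, 1, 0, 1, 0, 0]
```

Because the per-pixel function carries no state between calls, the same logic maps naturally onto a GPU kernel; only the outer loop changes.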

As data needs continue to increase, modern embedded systems are faced with some serious performance issues: continuing to use only a CPU as the main computing engine would eventually choke the system. Offloading the highly demanding data calculations to the GPU, while allowing the rest of the application to run on the CPU, helps balance system abilities and resources more effectively.

GPU-accelerated computing runs the compute-intensive portions of an application on the GPU, using less power and delivering higher performance than a CPU alone. Through this improved performance-per-watt ratio, GPU-based systems can meet the heavy calculation demands these applications now require.

Managing Significant Volumes of Data

NVIDIA’s Volta architecture introduced Tensor Cores, which accelerate the matrix processing of large data sets, a critical function in AI environments, by enabling higher levels of computation with lower power consumption.

Volta is equipped with Tensor Cores, each performing 64 floating-point fused-multiply-add (FMA) operations per clock. This delivers a high number of TFLOPS for training and inference applications and enables deep learning training using mixed precision, with FP16 compute and FP32 accumulate, achieving both a 3x speedup over the previous generation and convergence to a network’s expected accuracy levels.
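The reason for pairing FP16 compute with FP32 accumulate can be illustrated with a short Python sketch. It is not a model of how a Tensor Core is actually built (the hardware works on small matrix tiles, not a scalar loop); it only emulates the rounding behavior, using the standard library's `struct` module to round values to half and single precision. The helper names `to_fp16`, `to_fp32` and `dot` are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot(a, b, round_accumulator):
    """Dot product with FP16 inputs.

    After every multiply-add, the running sum is rounded to the
    accumulator precision selected by round_accumulator.
    """
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)  # product of two FP16 values
        acc = round_accumulator(acc + product)
    return acc


n = 4096
ones = [1.0] * n

fp16_accumulate = dot(ones, ones, to_fp16)  # sum kept in FP16
fp32_accumulate = dot(ones, ones, to_fp32)  # sum kept in FP32

print(fp16_accumulate)  # 2048.0: FP16 cannot represent 2049, so the sum stalls
print(fp32_accumulate)  # 4096.0: the expected result
```

With an FP16 accumulator the running sum stops growing once it reaches 2048, because the next representable half-precision value is 2050. Keeping the accumulator in FP32 avoids this loss, which is consistent with the convergence behavior described above.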

The Xavier NX SoM from the NVIDIA Jetson family implements a derivative of the Volta GPU with an emphasis on improving inference performance over training, making it well suited to deploying deep learning at the edge. When the NVIDIA Jetson Xavier NX is coupled with Aitech’s rugged computing expertise, the result is an AI supercomputer like the A179 Lightning, which handles up to 21 TOPS (trillion operations per second) to provide local processing of high volumes of data closest to the sensors, where it is needed. (Figure 3) Xavier NX-based systems offer some of the most powerful processing capabilities in an ultra-small form factor (SFF) system. The A179 system, for example, features 384 CUDA cores and 48 of NVIDIA’s new Tensor Cores. Using open source tools, developers can accomplish deep learning inference on the Xavier NX, using the two NVIDIA deep learning accelerator (NVDLA) engines incorporated into the A179 Lightning. This facilitates interoperability with modern deep learning networks and contributes to a unified growth of machine learning at scale. The system also features a pre-installed Linux OS, which includes the boot loader, Linux kernel, NVIDIA drivers, an Aitech BSP, flash programming utilities and example applications.
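As a rough back-of-the-envelope illustration, the Tensor Core count can be combined with the per-core FMA rate quoted earlier for Volta. The sketch below makes several assumptions that are not stated in this article: that the 64 FMA-per-clock figure also applies to the Volta derivative in the Xavier NX, that one FMA is counted as two operations, and that the clock frequency is a placeholder rather than a product specification. The result is therefore not directly comparable to the 21 TOPS system figure, which the article does not break down.

```python
FMA_PER_CLOCK_PER_TENSOR_CORE = 64  # per-core figure quoted for Volta
OPS_PER_FMA = 2                     # one multiply plus one add (a counting convention)


def tensor_ops_per_clock(tensor_cores):
    """Floating-point operations all Tensor Cores can issue in one clock."""
    return tensor_cores * FMA_PER_CLOCK_PER_TENSOR_CORE * OPS_PER_FMA


def peak_tera_ops(tensor_cores, clock_hz):
    """Theoretical peak in tera-operations per second at a given clock."""
    return tensor_ops_per_clock(tensor_cores) * clock_hz / 1e12


A179_TENSOR_CORES = 48    # from the article
ASSUMED_CLOCK_HZ = 1.0e9  # hypothetical placeholder, NOT a specification

print(tensor_ops_per_clock(A179_TENSOR_CORES))  # 6144
print(peak_tera_ops(A179_TENSOR_CORES, ASSUMED_CLOCK_HZ))
```

Substituting the actual GPU clock from the module's data sheet for `ASSUMED_CLOCK_HZ` would give a theoretical ceiling for the Tensor Cores alone; sustained throughput depends on memory bandwidth and the workload.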

Rugged, Ready, Reliable

All that advanced processing won’t mean a thing to a military and defense operation if the system isn’t able to withstand environmental factors and provide stable, long-term operation, so ruggedization plays a critical role in system development.

Since GPGPUs aren’t rugged at manufacture, the components must be ruggedized, which requires a unique expertise to ensure these GPU-accelerated, advanced processing systems can reliably operate in remote, mobile and harsh environments. A deep understanding of how to design reliable systems for rugged environments becomes critical, including which techniques will best mitigate the effects of environmental hazards and ensure that systems meet specific application requirements.

At Aitech, for example, our AI GPGPU-based boards, AI HPEC (High Performance Embedded Computer) Systems and small form factor (SFF) AI systems are qualified for, and survive in, several avionics, naval, ground and mobile applications, thanks to the decades of expertise our team can apply to system development.

Balance Power and Performance

Managing power consumption is always a factor in a system’s development, but because GPGPU boards process far more parallel data using hundreds or thousands of CUDA cores, it’s best to look at the positive impact on the performance-to-power ratio.

In addition, GPGPU boards are very efficient, with some boards matching the power consumption of CPU boards. So, systems obtain more processing for the same, or slightly less, power. But tradeoffs still exist between performance and power consumption. It’s just a fact that higher performance and faster throughput require more power.

But these are the same tradeoffs you find when using a CPU or any other processing unit. As an example, take the “NVIDIA Optimus Technology” that Aitech is using, which is a GPU switching technology in which the discrete GPU handles all the rendering and algorithmic duties. The final image output to the display is still handled by the CPU with its integrated graphics processor (IGP).

In effect, the CPU’s IGP is only being used as a simple display controller, resulting in a seamless, real-time, flicker-free experience with no need to place the full burden of both image rendering and generation on the GPGPU or share CPU resources for image recognition across all of the CPUs. This load sharing, or balancing, is what makes these systems even more powerful.

When less critical or less demanding applications are running, the discrete GPU can be powered off and the Intel IGP handles both rendering and display calls to conserve power and provide the highest possible performance-to-power ratio.

Next-level Computing

So, with GPGPU-based processing, we are meeting the call for better intelligence, more intuitive computing capabilities and increased system performance. GPU-accelerated computing has helped to take AI to new depths of learned intelligence, a world that can optimize complex, highly sophisticated computing across many industries by reliably managing higher data throughput and balancing system processing for more efficient computing operations.